This weblog post continues the story about men’s sheds. See: https://brock.mclellan.no/2019/03/03/mens-sheds/ Here, the focus is on how a shed can help men be of service to their community. As this post is being written, a makerspace is being constructed in Inderøy, and I am looking forward to it becoming part of a world-embracing network. Locally, there are many target groups for this workshop, including men who need a men’s shed. Another target group consists of pupils at Inderøy lower secondary school. Even the local Friends of the Earth group intends to use the workshop to make controllers for a 15-square-meter geodesic dome greenhouse, equipped with hydroponic gardening facilities. Full disclosure: The author is the chairperson of the Inderøy chapter of Friends of the Earth.

Purpose

People like to be of service to others, as long as they are treated fairly and with respect. At a men’s shed, it would be helpful if participants knew what types of products people want made, using the technology available at the shed or makerspace. This especially includes products for people with special needs: those who feel they lack the income to keep up with the changing pace of technology, and those whose unique needs are not normally met by off-the-shelf components.

With this information in hand, shedders could spend time designing low-cost products, the systems to make them, and the plans to manufacture and distribute them. This includes products that can enhance everyone’s enjoyment of life, as well as those that improve the life of just one single person.

While some men may have many of the skills needed to design, make and distribute meaningful products, others may have only some of them, or none at all. So a first step is to assess the totality of skills possessed by the men’s shed group, the specific skills each person wants to learn, and what each person wants to do with his current and future skill sets. Just because someone is an expert, or the best in a group, does not mean that person should be selected for that specific job. Perhaps he should teach others, learn new skills, or improve old ones.

While the current focus is to get a men’s shed up and running in Inderøy, the great thing about open source development is that a project can be forked, that is, split into two or more independent branches. Locally, my interest is to ensure that people with mobility issues can have men’s sheds close to them, including in hamlets such as Mosvik (20 km from Straumen) and Beitstad (20 km from Steinkjer). In addition, I hope that some of these designs/ products will interest men living further away, so that people can work together on them, regionally or internationally.

This requires complete documentation of each and every project, so that it can be localized. Localization is techno-speak for adapting a project to the culture of a different area. For example, a project originating in the Americas may need all of its dimensions and components converted to metric for use in Asia. Tools that may legally be used in the USA may be illegal in the EU and Norway, so substitutes may have to be found. Localization becomes much more than a linguistic translation.

Struggles

At a men’s shed, many different projects will be presented for participants to consider. Some will be so simple that a single person may be able to start and complete them in a matter of minutes. Others may require the efforts of many different people over a longer period of time. When several complex projects are available, it is important that the men’s shed community be able to prioritize, and even reject, some of them. Some projects may demand skills that are not available. Others may take too long, or require too many people. Regardless of the project, there must be an overview giving a reliable timeline for the people with specific skill sets, as well as the other resources that are needed. In other words, one needs a project plan.

With a project plan, one knows where to begin. Yet not all projects will begin at the same place. The OpenBuilds project tracks a large number of technical projects, many of them equipment-related. When a new person or group builds a new iteration of a project, improvements can be incorporated. Again, some solutions are simple; others are incredibly complex. Fortunately, because many people throughout the world document these open source solutions, reinvention is unnecessary. Instead, one can often make a generic product directly, or adapt it for a specific user.

Life can be a struggle. As trust builds in a men’s shed community, people will gradually, perhaps even reluctantly, share insights into what they are struggling with. Sometimes people need to be alone. Sometimes they need to work alone. Sometimes they need to work alone in the proximity of others. Sometimes they need to work co-operatively (but silently) with others. Sometimes they need to work co-operatively, while talking shoulder to shoulder.

This design-and-make process is not always easy. Many people have special needs, and insights into solving their own struggles. With a little help, they should be able to transfer those insights to other people. Yet sometimes, indeed often, this doesn’t happen. One major reason is that mental health issues, such as anxiety or depression, divert attention.

Not all product development will involve rocket science or cutting-edge technology. Much of it will simply require skills with traditional equipment that shedders have used before and feel comfortable with: woodworking/ carpentry tools and blacksmithing/ metalworking/ welding tools. People who feel comfortable in this analog world should be encouraged to remain there, if this is what they want.

On the other hand, if they want to enter the digital world, there should be a place for them there too. Much digital work at an introductory level simply involves copying files and running them on a 3D printer/ CNC mill/ laser cutter, etc.

At intermediate levels, there may be a greater mismatch between the skills that are needed and the skills that people have, so additional training may have to be offered.

A great many different equipment related projects can be found at: https://openbuilds.com/

Sometimes experts will undertake the drudgery necessary to bring a complex project to life. The NeuroTechnology Exploration Team lab at Rochester Institute of Technology, Henrietta, NY provides an example of how technology can be developed, then transferred throughout the world. Brain-computer interfaces (BCIs), where an individual controls computers and other devices using only their mind, are a rapidly expanding field with a wide range of potential applications. BCI devices are especially desirable as assistive technologies for those with impaired motor or communicative capabilities. Everything the team uses in their projects is sourced and produced as cheaply as possible. The technologies used are noninvasive, relying either on electroencephalography (EEG) electrodes attached to the scalp or on localized muscle contractions to convey signals to the computers and devices. The software is open source and can be downloaded to any computer. For further information see: https://reporter.rit.edu/tech/brain-computer-interfacing-comes-rit

If open source solutions aren’t available off the shelf, a client may have to be open about his or her struggles to start the design process. This normally requires interaction, so that insights can be transferred, then developed and applied to specific problems. However, that interaction does not have to be face to face. Shelagh McLellan’s bachelor’s degree project, On Trac (2011), was an iPad application that helped facilitate communication between teens and doctors. Teens were often able to communicate things on a tablet that they would be too embarrassed to say directly to a doctor. For further information, see: https://cargocollective.com/shelaghjoyce/On-Trac

Many of the struggles facing people can be mitigated/ resolved by constructing some sort of physical device (including clothing) that incorporates mechanical and electrical components and is then programmed with software to do a specific job.

An example

As stated previously, not all challenges are leading-edge. Here is an example of a widespread problem that has multiple solutions.

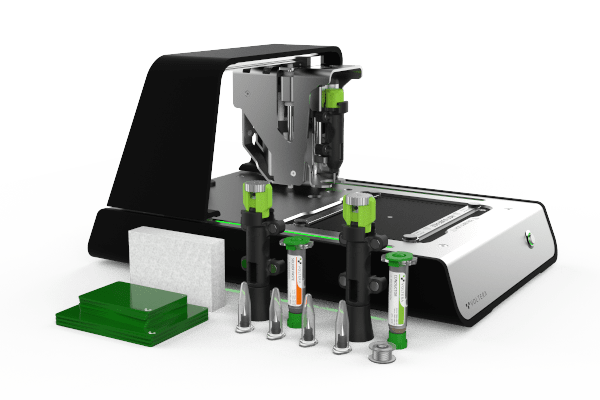

I don’t like the way fruits and vegetables are sold. I dislike other people having the opportunity to handle produce/ vegetables/ fruit that I am expected to eat. I see four solutions to this problem. The first involves seeking psychological help and learning to live with the current situation. The second involves political action to ban consumers from stores and to use self-driving delivery vans packed by robots instead. The third is the status quo, which means I leave the shopping to someone else. The fourth, and my preferred solution, is to grow fruit and vegetables at home. There could be many ways to do this, but I am most attracted to building a geodesic dome greenhouse and equipping it with hydroponic gardening facilities. Personally, I would rather spend my time building greenhouses and hydroponic equipment than working in the greenhouse growing plants. My hope is to find someone to work with me on this project, someone more interested in growing and tending plants, perhaps the same person who currently does my shopping. This is the same solution that the local Friends of the Earth chapter in Inderøy is exploring.

Poverty

One issue that cannot be ignored is poverty. Many of the challenges people face are caused by being unable to afford the products that would solve their problems. There are different degrees of poverty. Extreme poverty can result in emaciation and homelessness; more moderate poverty can result in obesity and sub-standard living conditions. People put on weight because the healthy food they need is just too expensive. More generally, they feel they don’t have the economic freedom or economic opportunities that they would like. Some younger people feel that they will never be able to buy a house, and will end up as life-long renters, or worse. Some older people feel that they do not have the resources to buy even necessities, such as heat, because energy costs too much.

Insight

I don’t want to know anything about a client’s or reader’s personal situation. That is a private matter. If you want a men’s shed to help with a struggle, please wait until one is established, or (help) start one yourself.

Here is the information I think a men’s shed would need in order to work on an extensive project for a potential client. A one- or two-day project, with a few people making something simple, does not need this level of detail.

- Please describe the client in general terms: Approximate age and gender; living environment – urban or rural, living alone or with others (yes, dogs are included in the others category); type of housing and area; income source such as part-time or full-time employment, pension, reliance on savings, etc. This helps the men’s shed understand the client’s circumstances.

- What high priority physical, emotional or social challenges is this person facing? Please try to describe them in as much detail as necessary.

- What are the economic implications of these challenges?

- What solutions does this person envision that would help her or him resolve or mitigate the challenge?

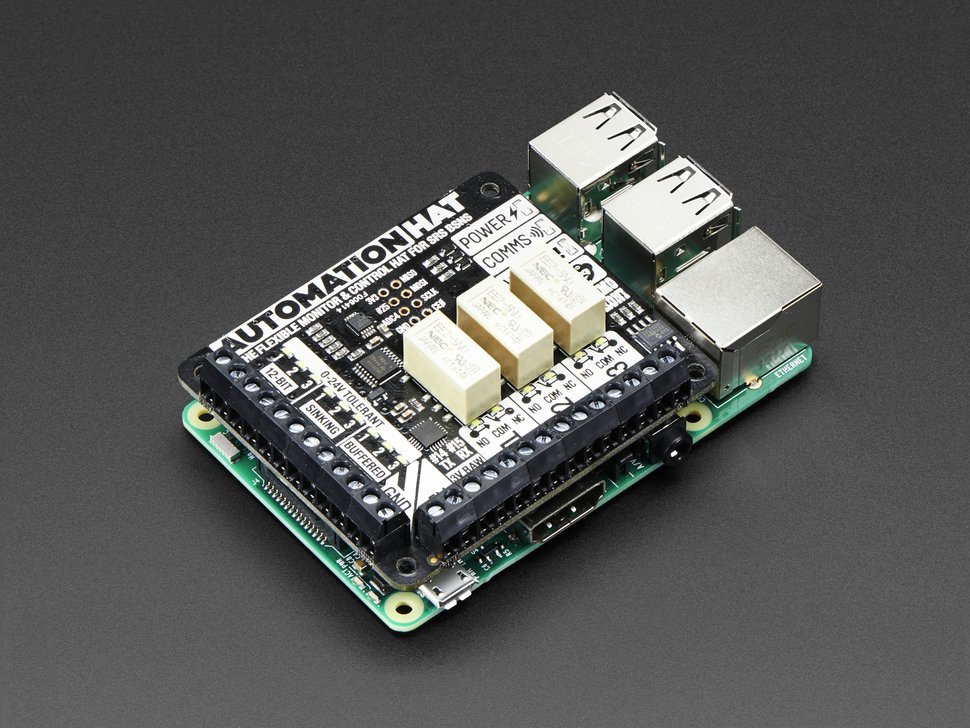

Many proposed solutions will involve integrating smart house or internet of things technology into a residence or workplace. If so, it may be appropriate to solicit additional information. In my experience, many solutions are device-dependent. At the same time, many clients are unable to use new or different devices or technologies. This is why the first of the following questions requests such specific information.

- What devices is the client using? Phone – clamshell, smartphone; other personal devices – tablet, laptop, desktop; servers; input devices, such as keyboards, mice and scanners; output devices, such as televisions, screens and printers; and everything else that is connected to the internet, with or without a cable. Any information about makes, models and features would be helpful, as would any prices actually paid – new or used.

- What is this person using these devices for? This is an important question, and arbitrary limits should not be put on it.

- What communications and related services are being purchased/ provided? How are they being delivered? What do they cost? For example, some people have a landline incurring a monthly charge; some people visit coffee shops to use Wi-Fi connections; some people have cable television and/ or broadband and/ or dial-up internet and/ or alarm systems and/ or ???

- What would this person want to use devices for, if a device had the necessary attributes, and service providers made services available either free, or at an affordable price? The essence of this question is, what does this person really want from his or her devices?