There are many different types of environmentalists. Most people’s involvement in environmentalism does not involve a full range of issues. Instead, there is a focus on just one, or a few. For example, some people are focused on nuclear energy, or policy decisions on bears and other carnivores, or preservation of the arctic fox.

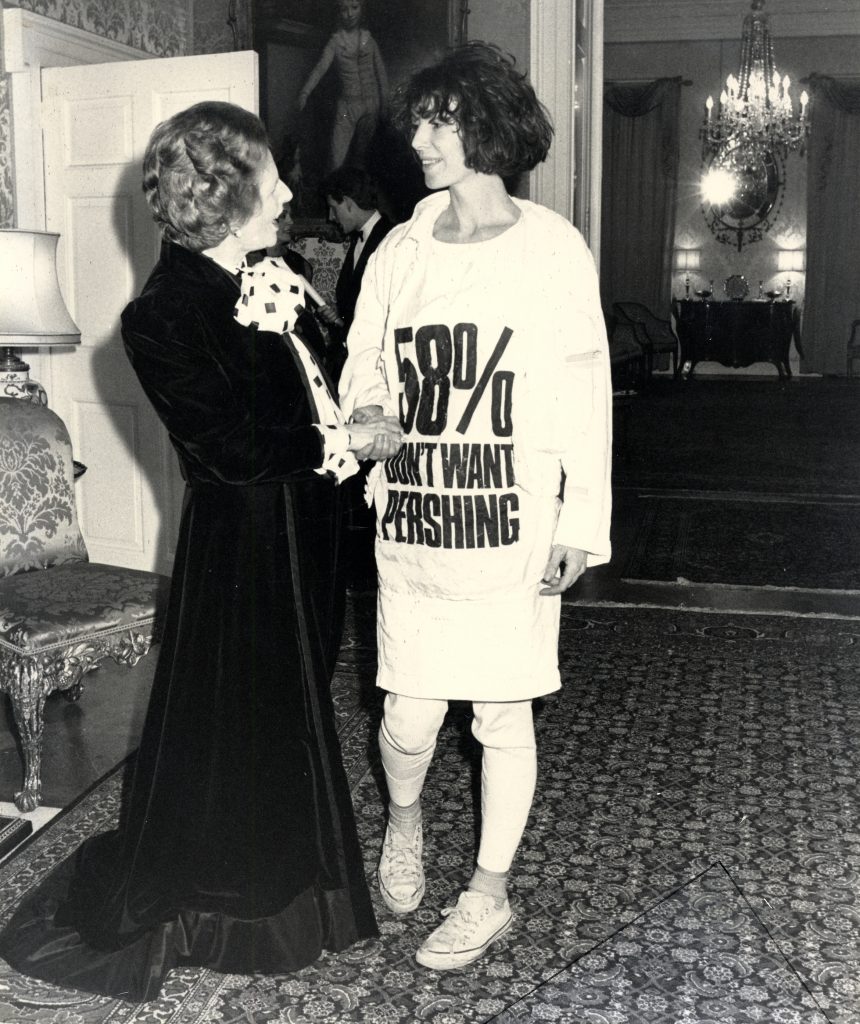

For many, their most distinguishing garment is their hiking boots. Others are more comfortable in a lab coat. There are even people who prefer tailored suits, to cavort with members of political/ business elites. Fortunately, more and more people simply wear their ordinary school clothes to protest outside their favourite democratically elected assembly each and every Friday. Personally, I feel most comfortable outfitted in protective clothing suitable for a workshop. One can never be quite sure what type of clothing evokes the best environmentalist image, except to refer to the stunning success of Katharine Hamnett, dressed in a rather long sweatshirt with dress sneakers, at a reception at 10 Downing Street in 1984, now 35 years ago.

The reason for all of these different fashion statements is that people have their own individual environmental style. Personally, I see a need for a flora of environmental organizations, each with its own approach. To help explain this concept, I’d like to use religion as an analogy.

There is a large segment of the population in Norway who are active – but more likely passive – members of a Lutheran church, still often – but incorrectly – referred to as the State Church. Many immigrant families are members of the Catholic church, while other immigrant families are members of a wide variety of Muslim organizations. There is also a variety of other religions, associated with other faiths.

Membership in a religion involves a two-fold declaration. First, a potential member must hold a minimal set of core beliefs that are known in advance. Second, the religion must be allowed to assess whether that person meets its membership requirements. It is not enough for a person to declare that they are Jewish/ Christian/ Muslim/ Baha’i, and thereby oblige the particular religion to accept them as a member.

Bridge building between the various religions is not undertaken by having every religious person join an ecumenical organization, and then allowing decisions to be made through democratic voting procedures. That would result in a tyranny by the majority. Instead, the different Faiths/ denominations become members, and areas of common interest are developed through consensus. There will, of course, be areas where these organizations agree to disagree.

My experience of Friends of the Earth is that it – like the Church of Norway – has a large number of passive members, who pay an annual membership fee more out of guilt than belief. Yet, it also resembles The Council for Religious and Life Stance Communities, hoping to foster mutual respect and dialog between a variety of environmental perspectives, and working towards their equal treatment.

The Norwegian name of Friends of the Earth is not Verdens Venner or even Jordens Venner, as could be expected with a literal translation. Instead, it is Naturvernforbundet, which is officially translated as the cumbersome The Norwegian Society for the Conservation of Nature/ Friends of the Earth Norway. For linguists, one could cryptically add: natur = nature; vern = protection; forbund = for-bund = together bound = federation/ association/ society; et = neuter definite article = the, which is put at the beginning of the phrase in English. Note the general absence of norsk (adjective, not usually capitalized) = Norwegian, or Norge (noun, in Bokmål, spelt Noreg in Nynorsk or New Norwegian) = Norway. However, the name sometimes begins with Norges (possessive noun) = Norway’s, if there is a need to distinguish the organization from its counterparts in other countries.

Because of the structure of Friends of the Earth, there is no need for the organization to build consensus. Instead, individuals can position themselves to become representatives attending bi-annual national meetings, and voting on policy decisions. In this internet age, this 20th century approach means that a determined few can decide policy that could be offensive to a more passive majority.

Some of the more radical and active members are able to capture the votes of this passive majority, and to use them to change/ uphold policy decisions. What appears to be consensus can more properly be described as a tyranny by the few. This problem can be remedied by replacing a representative democracy with a direct democracy – one member, one vote. This is attainable using today’s internet technology.

Unfortunately, Friends of the Earth cannot be both dogmatic and ecumenical at the same time. If it opts to take a more ecumenical approach, then instead of communities of Buddhists, Hindus, Humanists and Sikhs (all groups not mentioned previously), there would be a place for different views of environmentalism: field naturalists, species preservationists, workshop activists, to name three. Each group would then be allocated an agreed-upon number of council members. A (bi-)annual meeting would appoint a board, which in turn would employ a secretariat, and the organization would work towards consensus building.

Despite my role as leader of Friends of the Earth, Inderøy, there are days when I contemplate leaving the organization. This is related to one significant flaw with Hamnett’s photo (above), and that is the negativity of her message. One never wins friends by telling people what not to do. Instead, there has to be a positive message that can be periodically reinforced.

Friends of the Earth, Norway, is on the warpath again against imported plant species, including those grown in private gardens. Instead of making positive suggestions to grow some under-rated, beautiful, endemic species, they want to induce guilt in people who choose immigrant species.

I think, in particular, of the sand lupine, Lupinus nootkatensis, which thrives on sandy and gravelly ground, growing to about 50-70 cm high. The species name originates from Nootka Sound in British Columbia, Canada. It is a place I am intimately familiar with. The species was first listed on the Norwegian Black List 2007 (SE). Yet, the species came to Norway with The Norwegian State Railway (NSB), which used it to bind the slopes along the then (1878) newly constructed Jær Line, south from Stavanger for almost 75 km to Egersund. From there, the plant has spread along the railway and the road network to large parts of the country. Today, it is found in 16 of the country’s 19 traditional counties.

The species started its expansion from Jæren in the Southwest. It was observed in Stjørdal in 1911, which means it has been found in Trøndelag for at least 108 years. In a very short period of time, lupines grow densely and, where not limited by drought, can quickly reclaim large, barren areas because of their nitrogen-fixing ability. They can also extract phosphorus from compounds in poor soils. In spite of these good qualities, the species has a tendency to become dominant and overtake the natural flora. Of course, the reason why lupines were used by the railway is that there were no native Norwegian species capable of taking on the reclamation duties required: to combat erosion, to speed up land reclamation and to help with reforestation.

The reason for my despair is that many environmentalists do not seem to understand that the world of 2050 will be vastly different from the world of 1950 or 1850. Unfortunately, many of the species previously thriving in Norway will be totally unsuited for continued life in Norway in thirty years’ time.

The Crowther Lab at ETH Zürich has examined expected temperatures for 2050, and found that Oslo will experience a 5.6-degree increase in its warmest month, and a 2.2-degree increase annually. This could significantly weaken the viability of many species, including Norway maple, Acer platanoides, and strengthen an immigrant, Sycamore, Acer pseudoplatanus, which was introduced to Norway about 1750, and has become naturalized. There are suggestions that the Sycamore is replacing species devastated by disease such as the wych elm, Ulmus glabra, and the European ash, Fraxinus excelsior, which is at its cultivation limit at Trondheim Fjord.

NB Information about Lupinus nootkatensis has been updated. Apparently, it was already placed on the Norwegian Black List in 2007.