I prefer to call my smallest computer a hand-held device, rather than a phone. That is because it has to perform numerous tasks, most of which are totally unrelated to making voice calls to another human bean.

One of the conversations I frequently have with myself, has to do with technological choices. Why do people opt for smart devices, rather than their slightly less intelligent siblings? Or, why are people willing to pay a premium for smart devices? An even more alluring question is, why are people willing to pay a premium for dumb devices? This can be a very real situation, especially when the dumb phone in question is a Light Phone. My standard answers have to do with apps. Someone I don’t know and don’t want to know, will demand that I install and use some specific app in order to undertake something, that could be done with a much simpler and more generic, procedure. That someone has mandated the use of a specific app. I need to use that app because I need (want, is usually the more correct term) the service or product being provided. In other words, I opt to make a bad choice, because I want a particular product or service, more than I value my privacy.

Yes, I can give specific examples. Buzz, our daily drive, is equipped with studded snow tires in the winter, the result of driving on under-plowed, but over-iced roads. Some places, such as Trondheim municipality, require vehicle users with studs to pay a stud use fee. We accept this fact. Unfortunately, installing an app to do so is not something that can be done on the road. The information needed to associate a bank account with the app is safely locked/ hidden in our house. It is not taken on road trips. Of course, we would prefer to pay with our bank card, which was possible to do through the end of 2023. However, on a drive to Trondheim during the winter of 2024, the organization collecting the fee had removed the option to pay with a bank card, and wanted to collect additional information about us. As this situation arose about 120 km away from Cliff Cottage, our only solution was to: 1) not register for the app; 2) not use an uninstalled app to pay the use fee; 3) risk having our snow tires discovered, which would result in a fine. We were not caught. Since then, we do not drive into Trondheim in the winter. This means that we buy fewer things in Trondheim. Sorry, Ikea!

Paying for electric vehicle charging is another area where it has been possible for the energy providers to demand use of an app. For years, the Norwegian electric car association has been encouraging the Norwegian government to enact legislation that will allow the use of bank cards. This has now started to happen, and all new stations have to provide this option. Established stations will have until the end of 2025 to implement changes.

This is an interesting situation because most stores in Norway are required to accept cash payments. So, if I enter the coop to buy an orange, they are required to accept a cash payment for it. If I then drive across the street to the local charging station, that station is allowed to insist that I install their app, until the end of 2025! The government that is demanding that stores allow people to pay in cash, have managed to create a situation that allows charging stations to avoid cash or card payments!

Apart from a few conversations, some messages and taking lots of photos, there is not much that I want a smart device to do. Yes, I have the capability to use a smart device, but agree with many users who complain that smart devices have become too demanding, especially with abusive social media algorithms.

There is an entire industry developing anti-smart phones. One example is the Light Phone originally launched in 2015 following a successful Kickstarter campaign. A Light Phone II arrived in 2018. They were minimalist devices, with black-and-white displays, but without a camera. In 2024, the Light Phone III emerged, with a 50 M pixel front and 8 M pixel rear = selfie camera. This resolves my main complaint, but opens several new ones. The display is still black-and-white, despite the phone capturing colour images. This means they have to be viewed in monochrome until they are downloaded. There is no headphone jack, a feature I appreciate on my smart device.

More positively, its dimensions are: 106 x 71.5 x 12 mm. Its display is 100 mm with 1080 x 1240 pixels resolution. It has a 1 800 mAh removable battery. Other tools include optional: alarm, calculator, calendar, directory, turn-by-turn directions, hotspot, music, notes/voice memo, podcast and timer features. It provides a near-field communication (NFC) chip, that allows devices to exchange small amounts of data with each other over relatively short distances. It also has 5G connectivity and fingerprint ID.

Yet, I hesitate to enter the dumb phone world. One problem is a 2 Mpixel camera. It is far from the 50 Mpixel camera I am used to. Then there is the under-powered Unisoc T107 chipset, minimal 64 MB RAM and 128 MB storage, expandable up to 32GB via the microSD card slot. Then there is 4G, not 5G.

The Light Phone III is durable, constructed with a metal frame and recycled plastic panels, with an accessible and replaceable battery. The display and USB port are claimed to be more easily replaceable than those on conventional smart devices. When it launches in 2025, it is expected to cost about ten times the price of a Nokia 3210, or about 30% more than the price of my current smart device, an Asus Zenfone 9.

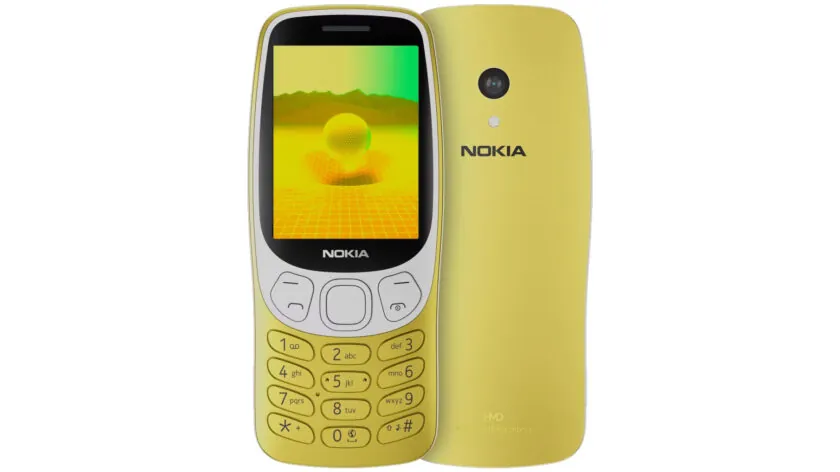

If I were to revert to a dumb device, I hope it would be a more stylish Nokia product, with its origins in Finland. Unfortunately, this device is targeted towards younger users, those under 16, who are likely to be banned from taking smart phones to school.

The revived Nokia 3210 was relaunched in 2024. The original Nokia 3210 was designed by Alastair Curtis (? – ) in Nokia’s Los Angeles Design Center. It was released in 1999, and became the 7th most-sold phone in history. The new phone has been restyled , appealing to dumb phone fans. Its dimensions are: 122 x 52 x 13.1 mm. It offers a 60 mm 240 x 320 pixel, in-plane switching (IPS) display, characterized as having the best colour and viewing angles, a USB-C connector for data transfer and charging, 4G connectivity, Bluetooth support, and dual-SIM capabilities. It runs Series 30+ based on Mocor OS, a proprietary operating system, and has Cloud Phone technology support. This means it is capable of accessing YouTube, and Google Sign-in Services as well as real-time modern web applications. The battery is removable, providing a Li-ion 1 450 mAh for up to several days of service. In terms of price, it is less than 15% of my current smart device.

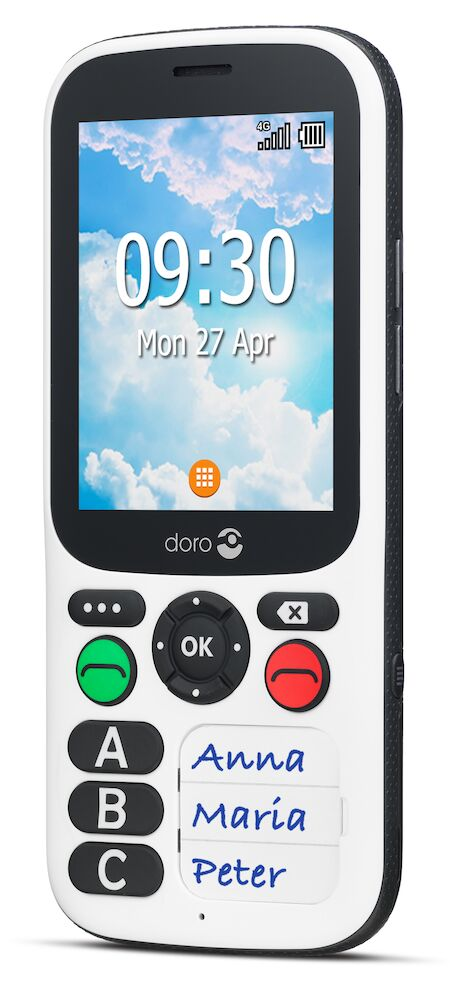

For an old geezer/ geezette, the most likely brand for a dumbish device is probably Doro, with its origins in Malmö, in southern Sweden. It refers to itself as a consumer electronics and assistive technology company. It was founded in 1974 as a challenger to the state-run telecommuncations monopoly, and developed communications products and services for the elderly, such as mobile phones and telecare systems.

The 8080 model is produced for people with eyesight, hearing and motor skill issues. If one is having dementia issues, the 8050 model could be more appropriate. In a smart move, Doro claims that these are smartphones. Yes, anyone can claim that because there are no official definitions about what is dumb and what is smart. However, Doro also produces other devices, including those with a clam shell shape, that they refer to as dumb phones.

Doro’s interface, Eva, is described by them as: patented, intuitive and action-based. Their sales propaganda claims it is: like having someone who understands your needs always by your side. Users never need to look around for things they can’t find. Eva simply gives them a few clear choices, and then does what the user wishes based on their response. She’s also the perfect companion when starting up the phone for the first time, guiding the user every step of the way. And because she is designed by Doro, Eva makes the technology fun, available and easy for everyone, whether new to Android, or a long-time user and fan.

The Doro Quick Start Guide admits that three types of people may be setting up a phone. One can choose between “Yes, I am a beginner”, “No, I have already used one” or “I’m setting the phone up for someone else” .

The phone is 174 x 74 x 9mm in size and weighs 175g and has a 5.7 inch 1440 x720 display with a narrow bezel so will fit into a pocket, handbag or backpack. The screen is large enough to be readable. There is 32 GB of storage, which is minimal for such a device. However, the SIM drawer can accommodate a microSD memory card of up to 128GB. To open the drawer one just uses one’s fingernail. On the top edge of the phone is a standard 3.5mm headphone port while at the bottom is a microphone, a loudspeaker and USB-C power connector.

The display has very clear icons, its brilliance can be adjusted, and there is the option to change the type size. Similarly, its audio can also be optimized for moderate hearing impairment as well as offering hearing aid compatibility.

A proximity sensor turns off the touch screen when the device is held up to one’s ear. Cameras include a 5 MP front/ selfie camera. On the rear is a 15 MP camera with a flash that can also act as a flashlight, as well as a second microphone. There is also a fingerprint sensor. My major concern is that the photo equipment won’t meet the needs of the target audience or, at least, myself.

Also with the phone are ICE = In Case of Emergency, and other assistance features. Personal contact and health data, such as blood groups and allergies, can be stored on the phone so as to be readily accessible. An assistance button on the rear of the phone will initiate a call and an SMS alert, complete with location, to be sent to the programmed telefone number of someone relied upon to respond.

The OS is based on Android 9 (formerly Pie), from 2018. While relatively old, it is also straightforward to use, with most of the bugs worked out. The View button offers a choice of 1) messages, 2) emails, 3) call history or 4) pictures and videos. The Send button takes one to messages, emails, a picture or video or the device’s current location. There are also pre-installed Android/ Google apps: Gmail, Maps and YouTube. Extra apps can be downloaded and installed. All apps are listed alphabetically, while the most frequently used ones can also be displayed on the opening screen for immediate access.

The Doro manual for the device can be downloaded. It provides useful background information and instructions for using apps. While the phone is only available in either black or white, there are cases/ covers available that make the phone more colourful.

Other related products from Doro include: HandleEasy = a seven-button basic remote control for radios and televisions; 3500 alarm trigger = a wristband/lanyard pairable with all Doro mobile phones with texting ability; Tablet = an Android tablet based on the EVA Android interface; Watch = a smartwatch similar to Motorola smartwatches, compatible with Android touchscreen phones; HearingBuds = sound enhancement devices compatible with Android touchscreen phones.

Compromise

It has been difficult to find reliable statistics about dumb and smart hand-held devices. Here are some best guesses from statcounter.com. At some point in 2024, it was estimated that there were about 4.88 billion smartphone users = people worldwide, representing more than 60% of the global population. At the same time, the number of smartphones = devices in use globally was estimated to be about 7.21 billion. It is easier to get percentage data. In 2025-01, more than 72% of hand-held device users, use some variant of Android, while more than 27% use iOS from Apple. This means that far less than 1% use something else. The number of Linux based hand-held devises (including Lineage) is estimated at 0.01%, with Lineage on 1.5 million devices.

The distribution of devices varies geographically. Here are the market shares in % for Android, followed by iOS in 2025-01: Africa = 85.29/ 13.58; Asia = 80.1/ 19.4; In Europe = 65.61/ 33.92; North America = 48.86/ 50.89; Oceania = 47.99/ 50.24; South America = 87.04/ 12.79. So in places like Canada and Australia there are more iPhone users than Android users. Norway follows European trends with a 65.06/ 34.54 split. The poor prefer Android.

One solution is for people to refuse a transition to a new device, such as a Doro by for keeping their existing devices, but updating them with appropriate software. For us that means keeping our current Zenfones, far into the future, potentially beyond the manufacturer’s end of support. There are some sites that claim that support for the Zenfone 9 ended on 2024-07-28. This would come in conflict with European consumer protection rights. So far, there is no indication with our phones, that we have experienced this. I suspect that Asus will not provide us with new versions of Android (we have version 13), but that security updates will be available for at least five years = 2027-07-28, possibly longer.

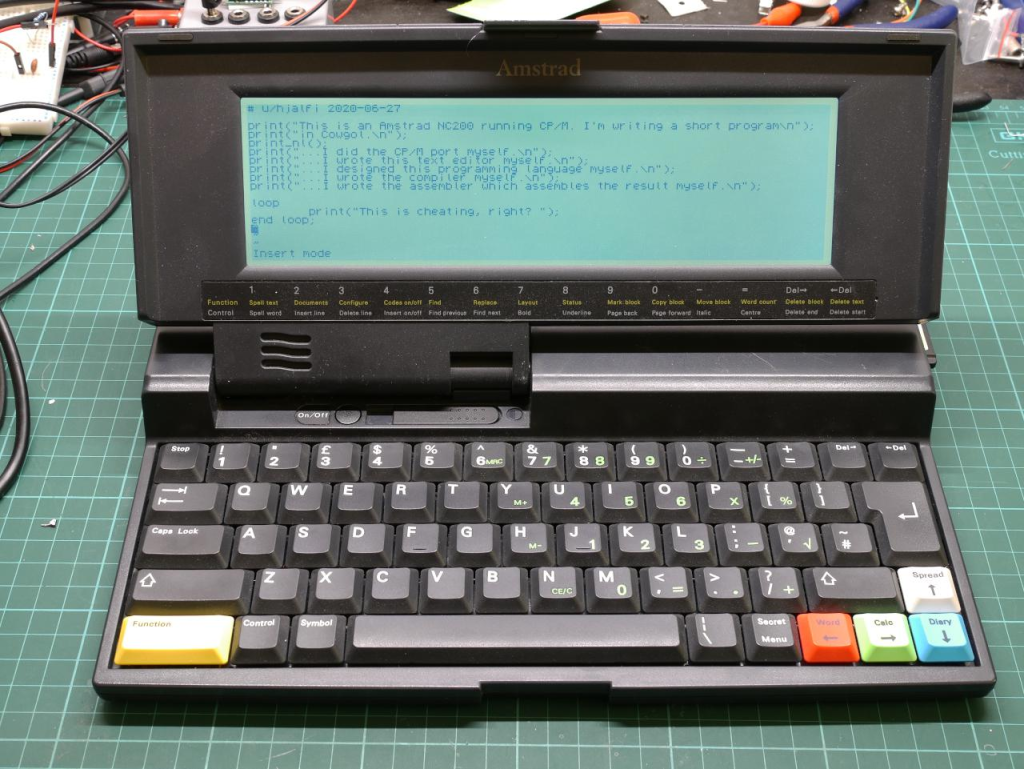

We have two 2018 Xiaomi Pocophone F1s in storage, that could become test mules for new device operating systems. I am most keen to investigate the e.foundation variant of Lineage OS. One could be installed on one Pocophone, along with the Bald Phone launcher. Hopefully this can be set up at Easter 2025 (with school holidays in the period 2025-04-11 to 21). While doing this, detailed plans for transitioning the Zenfone 9s to these variants can be developed and documented. They can then be implemented when an appropriate time comes.

It should be possible to test out basic programs/ apps and to see if they work better with one or the other variant of Lineage. As the Zenfone 9s age, it should be possible to pick up a third one cheaply, that could be regarded as a spare, in case one of the original ones fail.

Admittedly, people have valid concerns about Lineage as a serious supplier of an OS. At best, their 2018 April Fools Day prank was in bad taste, and is still remembered almost seven years later. It should not have happened. The prank involved a request for users to install specific software that most thought was an update. Once installed the device advised that the prank related software could not be uninstalled, and would be used for coin mining, others would profit by. Official apologies were issued on 2018-04-10. A company with so few users can’t afford to offend anyone with any sort of prank.

To appreciate the next section, it can be important to understand the definition of a launcher, in terms of a computing device. It is an app that changes the user interface of a device’s home screen, allowing the customization of the layout, icons, and overall look of the device. It helps people organize apps, access notifications and personalize their device experience.

BaldPhone is an open-source launcher that targets elderly people. A German psychology and aging journal examined responses from over 14 000 participants in the German ageing survey, who were asked at what age someone becomes “old”? People in their mid-60s generally said 75, suggesting that as people approach old age, they tend to push the marker further back. There is a demographic shift occurring in the world, with decreased fertility, and an increasing number of individuals aged 60 and over. Yes, at 76, I fall into that category myself. According to the United Nations, by 2050, the global population of older adults is projected to reach 2.1 billion.

The standard interfaces of popular mobile operating systems—iOS and Android—typically feature small icons, intricate menus and difficult to understand settings. Some people admit that this can be confusing for older people. Others avoid such comments, and simply say that there is a need for a more user-centric approach to device operating system design.

An alternative to buying a new devise is to replace/ update parts of the operating system, such as the BaldPhone open source interface. Its propaganda states that: BaldPhone offers a clean, straightforward layout that minimizes distractions and focuses on essential functions. With large, easy-to-read icons and a simplified user experience, BaldPhone empowers elderly users to interact confidently with their devices.

Key Features: User-Friendly Interface with oversized icons and a limited number of options per screen. An attention to size ensures that users with limited dexterity can easily tap the desired applications.

Customization: Users, other family members, friends or caregivers can personalize the launcher according to the user’s preferences. This adaptability helps instill confidence in users, as they can easily access their favorite applications and communication tools without wading through unnecessary features.

Essential Applications: users can highlight essential applications such as calls, messages, and emergency services.

Accessibility Features: Built-in accessibility tools, including text-to-speech capabilities and voice commands. This functionality is particularly valuable for seniors with visual impairments or those who may struggle with traditional typing methods.

Emergency Services: Quick-access emergency buttons allow users to reach out to their emergency contacts or dial emergency services just by tapping a single button.

Notification Management: Many older people find frequent notifications from various apps distracting. BaldPhone allows users to reduce or eliminate non-essential notifications, enabling them to focus on what matters most.

BaldPhone is built on the Android operating system. Developers claim it combines the robustness of a mature platform with a specialized user interface for older users. The open-source nature of the project allows developers to modify the code and tailor features, fostering a collaborative community focused on enhancing accessibility.

More information about BaldPhone can be found here.

Accessories

Neither a smart (or dumb) hand-held device, can operate alone. In the European Economic Area (EEA = an extended European Union) users no longer receive a charger when they buy a phone. All new phones, even those made by Apple, connect using USB-C. Currently, both Trish and I have chargers that allow charging through four ports: two use USB-C and connect to our laptops and hand-held devices; two use USB-A to attach to other items, including: portable lighting, Trish’s hearing aid container, uses a USB-micro connector. We have connectors fitted with USB-micro at one end, and USB-C or USB-A at the other end. The USB-C standard applies (or will soon apply) to all smaller devices needing to be charged to operate. We bought our two Acer Swift 3 laptops in the same store, the same day. When they came home, they were fitted with two very different barrel jack chargers, despite having power available through a USB-C port (as well as a barrel jack). We believe these charges with both USB-C and USB-A ports will have a life in excess of ten years, meaning they could be the last chargers we acquire.

Most hand-held device users, strengthen their displays with Gorilla glass, or its equivalent. Wikipedia tell us: The iPhone that Steve Jobs (1955 – 2011) revealed in 2007-01 still featured a plastic display. The day after he held up the plastic iPhone on stage, Jobs complained about scratches that had developed on the phone’s display after carrying it around in his pocket. Apple then contacted Corning and asked for a thin, toughened glass to be used in its new phone. The scratch-resistant glass that shipped on the first-generation iPhone would eventually come to be known as Gorilla Glass, officially introduced in 2008-02. Corning further developed the material for a variety of smartphones and other consumer electronics devices for a range of companies. There have been seven generations of Gorilla Glass produced, the latest being Gorilla Glass Victus 2. We have not fitted this type of product to any of our laptops or screens, and all have survived without issue. We have Gorilla Glass Victus (1) on our hand-held devices.

The third accessory that we have on our hand-held devices is a cover. This allows us to store cards with the device, eliminating the need for any other type of wallet. Our covers are in turquoise (Trish) and pink (Brock), which helps identify the specific device, since both devices are black. My covers always wear out faster than Trish’s. Thus, I bought three covers to last the lifetime of the device. I am still on the first cover. Trish has bought just one, and shows very little wear. One always has to be very selective about covers, especially if other needs than just physical protection are to be served.

The last accessory that is useful with a hand-held device is a stylus (passive or capacitive), that acts like a substitute finger when touching a device screen. With a passive stylus, there is no electronic communication between the stylus and the device. The device treats the stylus as a finger. These are considered less accurate than active styluses.

An active stylus includes electronic components that communicate with a device’s touchscreen controller, or digitizer. These are typically used for note taking, on-screen drawing/painting and electronic document annotation. They avoid the problem of a finger or hand accidentally making contact with the screen.

A haptic stylus uses haptic technology = realistic physical sensations which can be felt. Sometimes these can be enhanced by auditory = sound and tactile = touch illusions. A stylus is particularly useful for typing on a miniature keyboard. My fingers are too large for the virtual keyboard provided with hand-held devices.

It is possible to make an inexpensive passive stylus. My starting point is a dead Pilot V-ball 0.5 mm pen, originally filled with liquid ink. Other pens work equally well. Pens regularly run out of ink, making them useless for writing on paper, but ideal as a passive stylus. At my current rate of writing, I am able to construct a new stylus about every three months. It is repurposing, rather than recycling. To distinguish a pen from a stylus, I use white electrical tape around the clear ink tank on the stylus, so that it differs from the pen. My latest intention is to give them away as holiday season gifts.

Note:

Despite my obsession to date people and things, I have been unable to determine when Alastair Curtis was born. However, he received a Bachelor of Science at the Brunel University, then Master’s degree in Industrial Design Engineering at the Royal College of Art in London. He worked as a designer for Nokia starting in 1997. This probably indicates that he was born in 1974 or earlier. He became chief designer, senior vice president and head of the design department at Nokia in 2010. He was chief design officer for Logitech from 2013 to 2024. Logitech’s designs hold great appeal with me, and most of my peripherals are made by them. That includes all of my keyboards, pointing devices, head sets as well as my only desk mat, 30 x 70 cm in lilac! In 2024, Curtis became chief designer for VF Corporation (Vanity Fair Mills in a previous life), known for their outdoor wear, with brands such as The North Face and Timberland. Of which, I use none. This move by Curtis may be related to former Logitech CEO Bracken Darrell becoming CEO of VF Corporation in 2023.